In artificial intelligence (AI) and machine learning (ML), the quality of the dataset used to train a model can make or break a project. An off-the-shelf dataset - ready-made and available for immediate use - may seem like an easy shortcut for developers and researchers. The challenge lies in finding one that actually fits your specific needs. With so many options available, how do you make the right choice?

In this article, we’ll explore the six crucial factors to consider when selecting the right off-the-shelf dataset for your AI or ML project. By keeping these key considerations in mind, you can make informed decisions that contribute to better-performing models.

Key Takeaways

- Data Relevance: Ensure the dataset aligns with your industry and specific problem.

- Data Quality: Choose clean, well-structured data to avoid inaccuracies.

- Dataset Size: Choose a dataset large enough for robust model training, manageable with your resources, and able to scale with future needs.

- Licensing: Verify licensing terms to avoid legal issues.

- Bias and Diversity: Ensure datasets are diverse, and check them for biases that could skew your results.

- Update Frequency: Select datasets that are regularly updated to maintain relevance.

1. Data Relevance and Domain Suitability

One of the most important considerations when selecting an off-the-shelf dataset is relevance. A dataset must be appropriate for the specific problem or industry you're working on. For example, a dataset built for image recognition is unlikely to be useful for a natural language processing (NLP) task. Ensuring that your dataset aligns with your goals helps you build models that produce meaningful results.

- Metadata and Documentation: Always evaluate the metadata and documentation that accompany the dataset. This will help you understand its context and ensure it fits the use case. The documentation will also indicate any assumptions made during data collection, and potential limitations or biases that might be present.
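A simple way to make this review repeatable is to keep a checklist of documentation fields and flag any that a dataset's card or datasheet leaves empty. The sketch below assumes the card has already been parsed into a Python dictionary; the field names in `REQUIRED_FIELDS` and the example card are hypothetical and should be replaced with your own checklist.

```python
# Hypothetical checklist of documentation fields to require before adopting a dataset.
REQUIRED_FIELDS = ("description", "source", "collection_method", "license", "known_limitations")


def missing_documentation(card: dict) -> list[str]:
    """Return the required fields that are absent or empty in a dataset card."""
    return [field for field in REQUIRED_FIELDS if not str(card.get(field, "")).strip()]


# Hypothetical dataset card, e.g. parsed from a README or datasheet.
card = {
    "description": "Product reviews labelled positive/negative",
    "source": "Public e-commerce site",
    "license": "CC-BY-4.0",
    "known_limitations": "",
}

print(missing_documentation(card))  # ['collection_method', 'known_limitations']
```

An empty field is not automatically disqualifying, but it tells you which questions to put to the dataset provider before committing to it.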

Examples of Domain-Specific Datasets:

- Healthcare: Datasets with medical records, image analysis for radiology, or patient data for predictive modeling.

- Finance: Market data, stock prices, or transaction records, often used for algorithmic trading or fraud detection.

- Natural Language Processing (NLP): Text datasets for sentiment analysis, language translation, or chatbot training.

2. Data Quality and Completeness

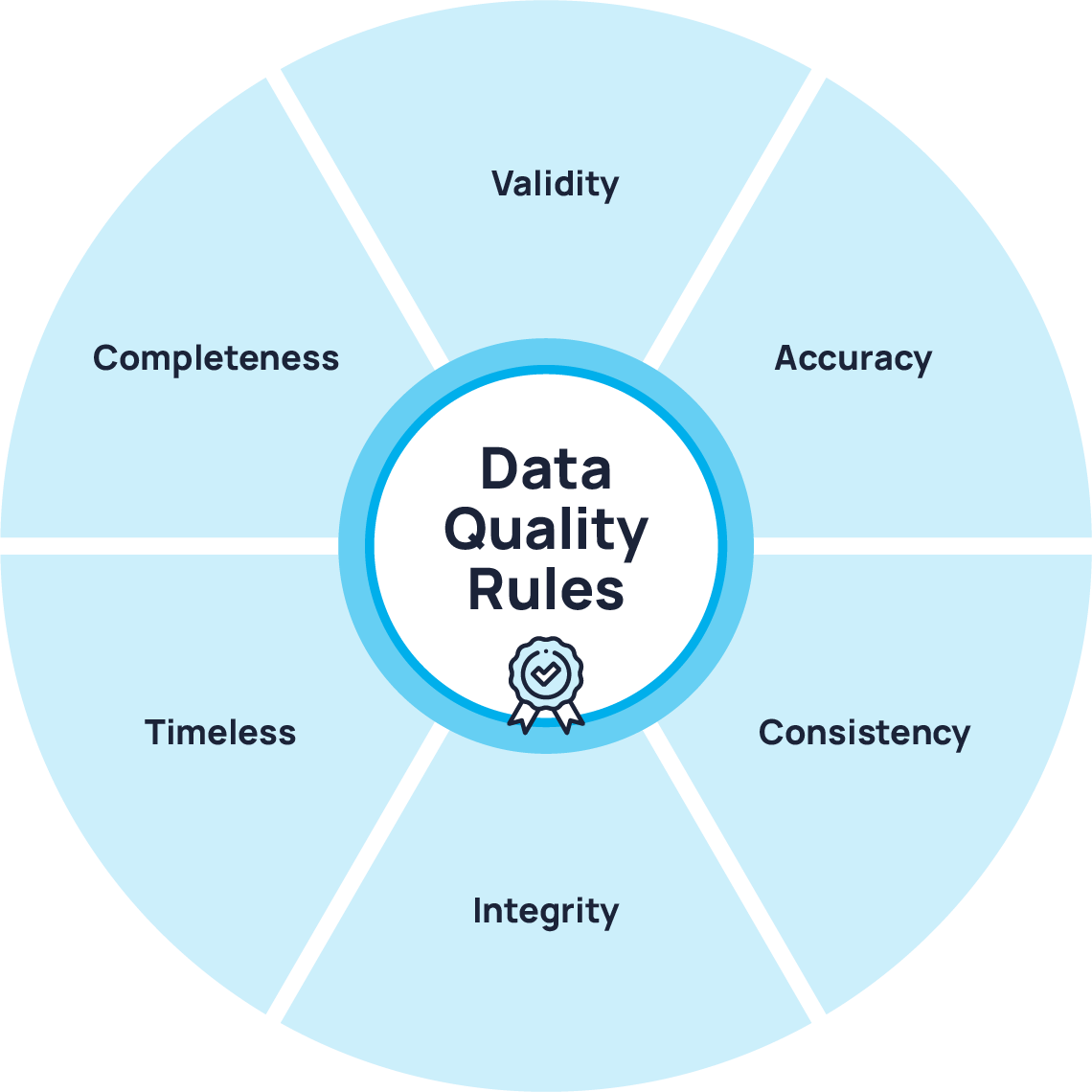

The quality of your dataset is just as important as its relevance. Low-quality data can lead to poor model performance and misleading results. When evaluating a dataset, you should consider:

- Cleanliness: The dataset should be as free as possible from errors and inconsistencies. Check for missing values and inaccurate labels; incomplete or mislabeled data can cause models to learn the wrong patterns.

- Structure: The dataset should be well-structured and organized. This includes proper labeling, consistent formatting, and an easily understandable schema.
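Before committing to a dataset, it helps to run a quick audit on a sample. The sketch below uses only the Python standard library and assumes records arrive as a list of dictionaries; the column names, the label set, and the `audit` helper itself are illustrative, not part of any particular tool.

```python
from collections import Counter


def audit(rows, label_key, allowed_labels):
    """Summarise basic quality problems in a list of record dicts."""
    columns = sorted({key for row in rows for key in row})
    # Share of rows where each column is absent, None, or an empty string.
    missing_rate = {
        col: sum(1 for row in rows if row.get(col) in (None, "")) / len(rows)
        for col in columns
    }
    # Exact duplicate records (identical column/value pairs); extra copies are counted.
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(n - 1 for n in counts.values() if n > 1)
    # Labels that fall outside the documented label set.
    invalid_labels = [row.get(label_key) for row in rows if row.get(label_key) not in allowed_labels]
    return {"missing_rate": missing_rate, "duplicates": duplicates, "invalid_labels": invalid_labels}


# Hypothetical sample of a sentiment dataset.
rows = [
    {"text": "Great product", "label": "positive"},
    {"text": "Great product", "label": "positive"},
    {"text": "", "label": "negative"},
    {"text": "Arrived broken", "label": "negatve"},
]
report = audit(rows, "label", {"positive", "negative"})
print(report)
# {'missing_rate': {'label': 0.0, 'text': 0.25}, 'duplicates': 1, 'invalid_labels': ['negatve']}
```

On real data you would typically run the same checks with a dataframe library, but the questions stay the same: how much is missing, how much is duplicated, and which labels don't match the documentation.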

In fact, a report from Accenture found that 70% of machine learning projects fail due to poor data quality. By focusing on clean, structured data, you ensure that the training process is more effective and your final model is more robust.

3. Dataset Size and Scalability

When selecting a dataset, consider the volume of data it provides. The size of the dataset directly impacts how well your model can generalize to real-world scenarios. However, larger datasets come with their own set of challenges, such as higher computational costs and longer training times.

- Balancing Size with Resources: Ensure that your computational resources (e.g., GPU/CPU power, memory, storage) are sufficient for the dataset's size. Overloading your resources can cause out-of-memory errors, very slow training, or failed training runs.

- Scalability: As your project grows or the model needs to be improved, you may need to incorporate more data. Choose datasets that are easily scalable or that can be updated without significant effort.
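A back-of-the-envelope estimate of in-memory size is often enough to tell whether a dataset fits your hardware. The sketch below assumes a dense numeric table stored as 4-byte floats; the `headroom` factor of 2 is an assumed allowance for working copies and batches, not a fixed rule.

```python
def estimate_memory_gb(n_rows: int, n_features: int, bytes_per_value: int = 4) -> float:
    """Rough in-memory size of a dense numeric dataset, in gigabytes (10**9 bytes)."""
    return n_rows * n_features * bytes_per_value / 1e9


def fits_in_memory(size_gb: float, available_gb: float, headroom: float = 2.0) -> bool:
    """Check the raw size times an assumed headroom factor against available memory."""
    return size_gb * headroom <= available_gb


# Example: 50 million rows x 200 float32 features.
size = estimate_memory_gb(50_000_000, 200)
print(f"{size:.1f} GB")            # 40.0 GB
print(fits_in_memory(size, 64))    # False: 80 GB needed with headroom
print(fits_in_memory(size, 128))   # True
```

If the estimate doesn't fit, the usual options are streaming the data in batches, using a smaller numeric type, or moving to larger cloud instances.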

Considerations for Scalability:

- Cloud platforms like AWS, Google Cloud, or Azure can help manage large datasets and scale resources as needed.

- Some datasets are specifically designed for big data applications, offering streamlined storage solutions for large-scale machine learning tasks.

As your dataset grows, ensure that your infrastructure can handle increased storage and processing demands. Planning for scalability from the outset will help maintain model performance and adaptability over time.
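One practical way to keep memory use flat as a dataset grows is to read it in fixed-size batches instead of loading it all at once. The sketch below uses Python's standard `csv` module; `iter_batches` is a hypothetical helper, and an in-memory string stands in for a large file.

```python
import csv
import io
from itertools import islice
from typing import Iterator


def iter_batches(file_obj, batch_size: int) -> Iterator[list[dict]]:
    """Yield lists of up to batch_size CSV rows without reading the whole file."""
    reader = csv.DictReader(file_obj)
    while True:
        batch = list(islice(reader, batch_size))
        if not batch:
            return
        yield batch


# In-memory stand-in for a large CSV file.
data = io.StringIO("id,value\n1,10\n2,20\n3,30\n4,40\n5,50\n")
sizes = [len(batch) for batch in iter_batches(data, batch_size=2)]
print(sizes)  # [2, 2, 1]
```

With a real file, you would pass `open("large.csv", newline="")` instead of the `StringIO` object. Memory use then depends on the batch size rather than on the total size of the dataset.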

4. Licensing and Usage Restrictions

Before you start using an off-the-shelf dataset, it’s crucial to understand the licensing terms. Data may be freely available for personal use, but commercial or public use could be restricted.

This table provides a clear comparison between open-source and proprietary datasets, helping to highlight the advantages and limitations of each option when choosing a dataset for your AI and machine learning projects.
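If your team reviews many datasets, a simple automated gate on each dataset's declared license can catch obvious conflicts early. The sketch below assumes licenses are recorded as SPDX identifiers such as `CC-BY-4.0`. The two sets are a hypothetical example policy: the real lists should come from your own legal review, and anything unlisted is routed to a person.

```python
# Hypothetical example policy keyed by SPDX license identifiers.
APPROVED = {"CC0-1.0", "CC-BY-4.0", "MIT", "Apache-2.0"}
NON_COMMERCIAL = {"CC-BY-NC-4.0", "CC-BY-NC-SA-4.0", "CC-BY-NC-ND-4.0"}


def license_decision(spdx_id, commercial_use: bool) -> str:
    """Classify a dataset license under the example policy above."""
    if not spdx_id:
        # No declared license: rights to reuse cannot be assumed.
        return "blocked: no license declared"
    if commercial_use and spdx_id in NON_COMMERCIAL:
        return "blocked: non-commercial license"
    if spdx_id in APPROVED:
        return "approved"
    # Share-alike, no-derivatives, custom or unknown terms need a human decision.
    return "needs review"


print(license_decision("CC-BY-4.0", commercial_use=True))     # approved
print(license_decision("CC-BY-NC-4.0", commercial_use=True))  # blocked: non-commercial license
print(license_decision("ODbL-1.0", commercial_use=True))      # needs review
```

The gate only reads what the dataset declares; it cannot tell you whether the provider actually had the right to license the data, so documentation and provenance still need a manual check.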

5. Bias and Diversity in Data

AI models can only be as unbiased as the data used to train them. It's important to ensure that the dataset is diverse and to check it for biases that might skew the model's outputs. Biased datasets can lead to unfair, discriminatory, or unethical results in areas like hiring, criminal justice, or healthcare.

- Demographic Diversity: Ensure that the dataset represents different demographic groups, including age, gender, ethnicity, and socioeconomic status.

- Contextual Diversity: The dataset should cover the different scenarios and contexts the model will encounter in the real world. A lack of diversity leads to poor generalization: the model performs well on data resembling its training set but fails in real-world situations that set never covered.
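A first check on diversity is simply counting how each group is represented. The sketch below computes the share of records per group for one attribute and flags groups below a minimum share; the `age_band` attribute, the sample data, and the 15% threshold are hypothetical choices for illustration.

```python
from collections import Counter


def group_shares(records, attribute):
    """Fraction of records in each group; missing or empty values count as 'unknown'."""
    counts = Counter(r.get(attribute) or "unknown" for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares, min_share):
    """Groups whose share falls below an assumed minimum."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical sample: 10 records with an age-band attribute.
records = (
    [{"age_band": "18-29"}] * 5
    + [{"age_band": "30-49"}] * 4
    + [{"age_band": "65+"}] * 1
)
shares = group_shares(records, "age_band")
print(shares)                          # {'18-29': 0.5, '30-49': 0.4, '65+': 0.1}
print(underrepresented(shares, 0.15))  # ['65+']
```

What counts as "enough" representation depends on the population the model will serve, so compare these shares against that population rather than against a fixed number.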

Addressing Bias:

- Preprocessing: Use data augmentation techniques to increase diversity.

- Bias Detection: Use fairness auditing tools like AI Fairness 360 to detect and mitigate biases before using the dataset.
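To see what a fairness audit measures, here is a hand-rolled version of one common metric, the disparate impact ratio: the favorable-outcome rate of an unprivileged group divided by that of a privileged group. Toolkits such as AI Fairness 360 report this metric; the code below is an independent stdlib sketch, not that toolkit's API. The hiring data is hypothetical, and the 0.8 cut-off follows the widely used "four-fifths rule".

```python
def favorable_rate(records, group_key, group, label_key, favorable):
    """Share of a group's records that carry the favorable label."""
    members = [r for r in records if r[group_key] == group]
    if not members:
        raise ValueError(f"no records for group {group!r}")
    return sum(r[label_key] == favorable for r in members) / len(members)


def disparate_impact(records, group_key, unprivileged, privileged, label_key, favorable):
    """Favorable rate of the unprivileged group divided by that of the privileged group."""
    return (favorable_rate(records, group_key, unprivileged, label_key, favorable)
            / favorable_rate(records, group_key, privileged, label_key, favorable))


# Hypothetical hiring labels: group A hired 6/10, group B hired 3/10.
records = (
    [{"group": "A", "hired": 1}] * 6 + [{"group": "A", "hired": 0}] * 4
    + [{"group": "B", "hired": 1}] * 3 + [{"group": "B", "hired": 0}] * 7
)
di = disparate_impact(records, "group", "B", "A", "hired", 1)
print(f"{di:.2f}", "flagged" if di < 0.8 else "ok")  # 0.50 flagged
```

A low ratio in the training labels is a signal to investigate how the data was collected, not proof of bias on its own; the same metric can be recomputed on model predictions after training.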

6. Update Frequency and Maintenance

Data can become outdated, and outdated data can severely impact the performance of AI models. For applications where accuracy is critical (e.g., fraud detection or financial forecasting), regularly updated datasets are essential.

- Dynamic Datasets: If your application requires constant updates (e.g., stock market predictions), select datasets that are regularly refreshed to stay relevant.

- Data Maintenance: Consider how the dataset will be maintained. If the dataset is sourced from third parties, ensure that it is consistently updated and maintained to avoid using stale data.
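A lightweight safeguard against stale data is to compare a dataset's last-updated date with a maximum age chosen for the application. In the sketch below, the 90-day limit is an assumed value; a fraud-detection system might use days, while a static catalogue might tolerate a year.

```python
from datetime import date


def is_stale(last_updated: date, today: date, max_age_days: int) -> bool:
    """True if the dataset is older than the allowed maximum age."""
    return (today - last_updated).days > max_age_days


# Hypothetical dataset last refreshed on 15 January 2024, checked on 1 June 2024.
print(is_stale(date(2024, 1, 15), date(2024, 6, 1), max_age_days=90))   # True
print(is_stale(date(2024, 1, 15), date(2024, 6, 1), max_age_days=365))  # False
```

Passing `today` explicitly keeps the check easy to test; in production you would pass `date.today()`.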

Strategies for Keeping Datasets Current:

- Version Control: Use versioning to track updates to datasets.

- Automated Data Pipelines: Set up pipelines to automatically fetch and incorporate new data into your system.
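At its simplest, dataset versioning means recording a content fingerprint for each version, so you can tell exactly which data a model was trained on and notice when a supplier's file changes. The sketch below hashes a file with SHA-256 from Python's `hashlib`; the `fingerprint` helper and the temporary file are illustrative, and dedicated tools such as DVC build fuller workflows on the same idea.

```python
import hashlib
import tempfile
from pathlib import Path


def fingerprint(path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's contents, read in chunks to handle large files."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "data.csv"
    path.write_text("id,value\n1,10\n")
    v1 = fingerprint(path)            # fingerprint of the first version
    path.write_text("id,value\n1,10\n2,20\n")
    v2 = fingerprint(path)            # fingerprint after an update

print(v1 != v2)  # True: any change to the contents changes the hash
```

Storing the fingerprint alongside each trained model makes it possible to trace results back to a specific version of the data.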

Recent research by McKinsey & Company found that companies using dynamic datasets and real-time data analytics are three times more likely to make faster and more accurate business decisions than their competitors. This highlights the advantage of using updated and timely data for business-critical AI applications.

Making the Right Dataset Choice for Optimal Performance

Selecting the right off-the-shelf dataset for your AI and ML projects is crucial for building accurate, reliable models. By carefully evaluating the six factors outlined above - data relevance, quality, size, licensing, bias, and update frequency - you can choose a dataset that suits your needs and supports your project's long-term success. Whether you're using off-the-shelf data or building a more customized dataset, getting this decision right early pays off throughout the project.

As you embark on your next AI or machine learning project, take a strategic approach to dataset evaluation. Assess the relevance, quality, and scalability of datasets before you begin, and ensure that the data aligns with your project’s goals. Informed decisions today can lead to more successful, reliable models tomorrow.

FAQs

What are the three types of datasets?

The three main types of datasets in machine learning are training, validation, and test datasets. The training dataset is used to teach the model patterns in the data, the validation dataset helps fine-tune hyperparameters and detect overfitting, and the test dataset evaluates the model's final performance on data it has never seen.
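A minimal way to produce these three splits is to shuffle the records once with a fixed seed and slice them. The 80/10/10 proportions and the `split_dataset` helper below are illustrative assumptions; time-ordered or grouped data usually needs a different splitting strategy.

```python
import random


def split_dataset(items, val_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle reproducibly, then slice into train, validation, and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed gives the same split on every run
    n_test = int(len(items) * test_frac)
    n_val = int(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 80 10 10
```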

How do I know if a dataset is suitable for my project?

Ensure that the dataset matches your industry and specific use case. Check its metadata, documentation, and sample data to evaluate its relevance.

How often should I update my dataset?

The frequency of updates depends on your project. For dynamic applications like fraud detection, updates should be frequent, whereas for static applications, annual updates may suffice.